This year I decided to try my hand at JS13k. Being the ambitious chap I am, I picked a genre that might not be the easiest one to fit in one month of free time and 13kB of JS: a strategy game. One of the most important things in a single-player game of this genre is the AI. If the AI is bad, there won’t be any challenge, and if there is no challenge, the gameplay will fall flat on its face as a result. This post is the story of my arduous journey towards getting it right.

It might make sense to play the game before reading on to get a better idea what I’m talking about. Also, you know, because it’s a good game :).

The min and the max

My first reflex was to use the minimax algorithm - which is kind of the default thing you use for turn-based AIs. None of the other approaches seemed to fit better.

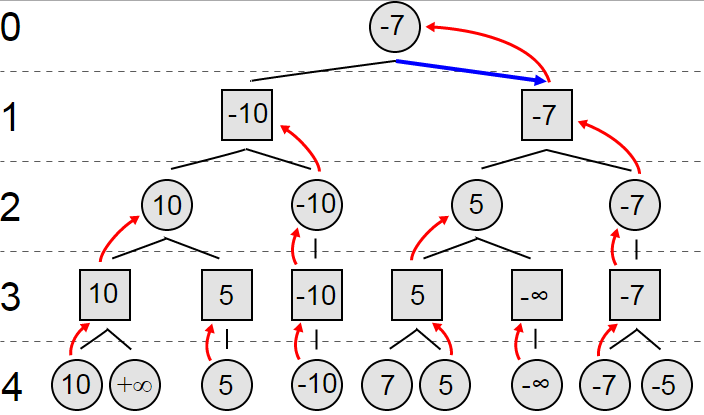

You can click the link above for a full description (check out the animated explanation while you’re at it), but the basic idea of the algorithm is as follows:

Imagine the game state as a giant tree. The root of this tree is the current game state. Every branch represents one possible move, and leads to the resulting game state after making that move.

In addition to the tree, we have an evaluation function - a way to convert the entire game state into a number corresponding to how favorable that state is for a given player.

We analyze the game to a chosen depth (say, 5 moves ahead), and use our evaluation function to calculate a value for the leaves - those are all the possible outcomes after 5 moves.

Next, we start crawling up the tree, figuring out values for the non-leaf nodes:

- if a node represents an opponent’s move, he will pick the branch with the minimal value, to minimize our gains.

- if a node represents our move, we will pick the branch with the maximal value, to maximize our gains.

In this way we propagate values up the tree until we reach the root - at which point we know what’s the best move to make (the move that maximizes the value minimized by our opponents - hence the name minimax).

Exponential explosions

Minimax seemed like a great fit, and evaluating a position in Compact Conflict seemed easy: just count up all the resources you control (regions, temples, soldiers), give them their proper weights (a temple is worth much more than a soldier), and done!

There is one problem in general with using min-max to create a god-like all-predicting AI: the bugbear we all know as computational complexity.

The number of nodes in our processing tree is md, where m is the average number of possible moves each turn, and d is our analysis depth.

This means that every time we want the AI to look just one move further, the whole thing gets m times slower. There are ways to lower this factor a bit (like α-β pruning or progressive deepening), but in general, you can’t get much lower than m/2 with generic approaches.

Still, not a problem as long as m is not too big, right? Well, consider the possible moves in Compact Conflict:

- you can move any army of your choosing

- for each army, we can move any chosen number of soldiers

- once we have the soldiers, they can move to any neighbouring region.

In the mid-game, once you multiply all this together, the number is on the order of five hundred. To illustrate the problem with exponents: in my first implementation, looking 3 moves ahead took the AI about 10 seconds. Looking 4 moves ahead gave you enough time for a full dinner.

After each move.

Creative gardening: pruning the tree

This wasn’t acceptable, so I immediately started looking for ways to curb this number.

I couldn’t make the AI ignore some of its armies. Dropping possible target regions for a move also seemed out of the question. On the other hand, considering all the possible soldier counts seemed like a bit of overkill. Usually, there isn’t that much difference between moving 3 or 4 soldiers out of 7. In the end, I decided to only consider two moves per army: moving all the soldiers, or just half of them.

In complex mid-game states, this could cut the number of possible moves in three, at the cost of making the AI occasionally miss a slightly better move. Since this threefold gain influenced every level of the decision tree, this made the AI two hundred times faster. Such is the power of exponents.

The next step was pruning “suicide moves” - attack against overwhelming forces, which could never be a good move. This was a much smaller reduction (maybe 10%), but in the end, the AI could comfortably think 5 moves ahead in about 10 seconds on my machine. Success, right?

The multiple move blues

Five moves ahead is enough for a decent chess program. It won’t be stellar, but it should avoid big blunders and challenge beginners easily.

The problem is, unlike in chess, in Compact Conflict each player gets 3 moves per turn. This means that looking 5 moves ahead won’t even get you to the end of the following player’s turn.

True to what might be expected in such a situation, the AI made significant progress on its own turn, only to leave key regions undefended against other players - most often those whose moves it never bothered to process.

Since there could be as many as 3 opponents, we would need to consider 12 moves to take all of them into account. If you paid any attention so far, you know that replacing 5 with 12 in the exponent is not a good idea - at least if we want the AI to make a move some time this century.

While I had some more tricks up my sleeve (I didn’t even implement α-β pruning yet), I was doubtful I could get up to 12 moves with just smarter minimax.

Human touch

I hit a dead end, but I wasn’t giving up yet. I took a step back, and thought: “how do I make my decisions during the game?

Obviously, I don’t go through the whole 12-move deep decision tree in my head - if I could, this post would be written in an alien language, and I would be considering invading the Earth. Instead, I found that I mostly consider two factors:

- threat to my regions - can any one of my opponents amass an army big enough to conquer this region, beating my defenders?

- opportunity for conquest - how many regions/temples do I pose a realistic threat to, using the armies I have available?

Thinking about those two things in effect allows me to ignore the exact moves my opponents will make. As long as they pose no threat to me, but I have an opportunity to attack them - what good can they do?

Translating into the AI world, this looked promising - if we could properly include those two factors, maybe we could get by with min-maxing only to the end of our own turn?

It turns out that there was a natural place I could bake those considerations in - the evaluation function. If a region was under threat, I decreased its value in the evaluation proportionally to the size of the threat. On the other hand, regions which we would have opportunity to conquer next turn added a fraction of their value to the evaluation, depending on how likely the conquest was.

The results were impressive. Not only was I able to face a competent AI that didn’t make brain dead mistakes for the first time, it was also faster than the 5-move-deep idiot I had before.

Perfect is the enemy of good

While I made small improvements here and there, this is mostly how the AI stayed until the end of the competition. I could still think of a hundred small things to improve, but decided against it. Why?

At this stage, the AI was workable. It wasn’t smart, but it wasn’t stupid either. Meanwhile, a game has a million components I could work on, and my goal as a game designer isn’t to make the best AI under the sun - it’s to make the player enjoy themselves.

I believe that Amdahl’s law doesn’t hold only for performance optimizations - it also holds for optimizing for fun! Once I got the AI to play reasonably, it was all diminishing returns. I could spend a day improving the AI to make it play 20% better, but would it make the game 20% better for the players?

I figured it wouldn’t, and spent the last four days of the competition working on game balance, the UI, tutorial, and many other things. If you’re wondering if it was the right decision, check the game out!